Pivotal/Cloud Foundry


Latest revision as of 16:02, 3 December 2018


Overview

Cloud Foundry is a software platform that lets you build and run software consistently, anywhere.

It runs on many infrastructure platforms, such as:

- Amazon Web Services

- Microsoft Azure

- Google Cloud Platform

- OpenStack

- VMware

Cloud Foundry builds out the infrastructure, including the virtual machines and a hardened version of the operating system (Windows or Linux).

The Operations Manager login URL has the form `https://{opsman_domain}/uaa/login`, for example:

https://opsmgr-10.haas-59.pez.pivotal.io/uaa/login

The Pivotal Apps Manager login URL has the form `https://login.{system_domain}/login`. To log in, use the credentials listed in the PAS tile under UAA > Admin. For example:

https://login.run-10.haas-59.pez.pivotal.io/login
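Both login URLs follow fixed patterns, so they can be derived from the two domain names. A minimal Python sketch, assuming only the URL patterns above; `opsman_login_url` and `apps_manager_login_url` are hypothetical helper names, and the domains are the example values from this page.

```python
def opsman_login_url(opsman_domain: str) -> str:
    """Build the Operations Manager (UAA) login URL for an Ops Manager domain."""
    return f"https://{opsman_domain}/uaa/login"


def apps_manager_login_url(system_domain: str) -> str:
    """Build the Apps Manager login URL; the login host is a subdomain of the system domain."""
    return f"https://login.{system_domain}/login"


if __name__ == "__main__":
    # Example domains taken from the URLs above.
    print(opsman_login_url("opsmgr-10.haas-59.pez.pivotal.io"))
    print(apps_manager_login_url("run-10.haas-59.pez.pivotal.io"))
```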

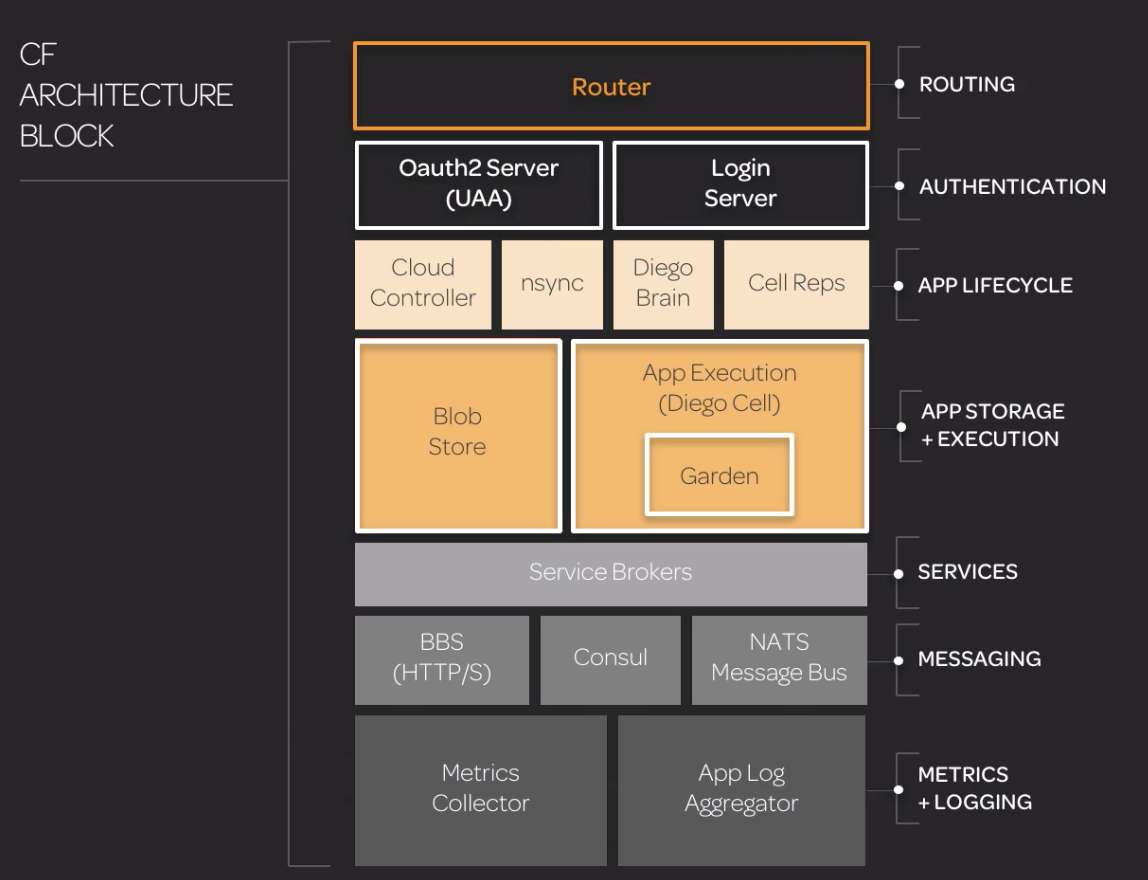

Hierarchy

Fundamental Concepts

Process Changes

- Distributed Systems
  - Distributed systems are hard to build, test, manage, and scale.

- Ephemeral Infrastructure
  - Virtual machines and containers are temporary.

- Immutable Infrastructure
  - Updates to systems and applications are not applied in place; instead, new, updated instances are created.

Elastic Runtime (CF)

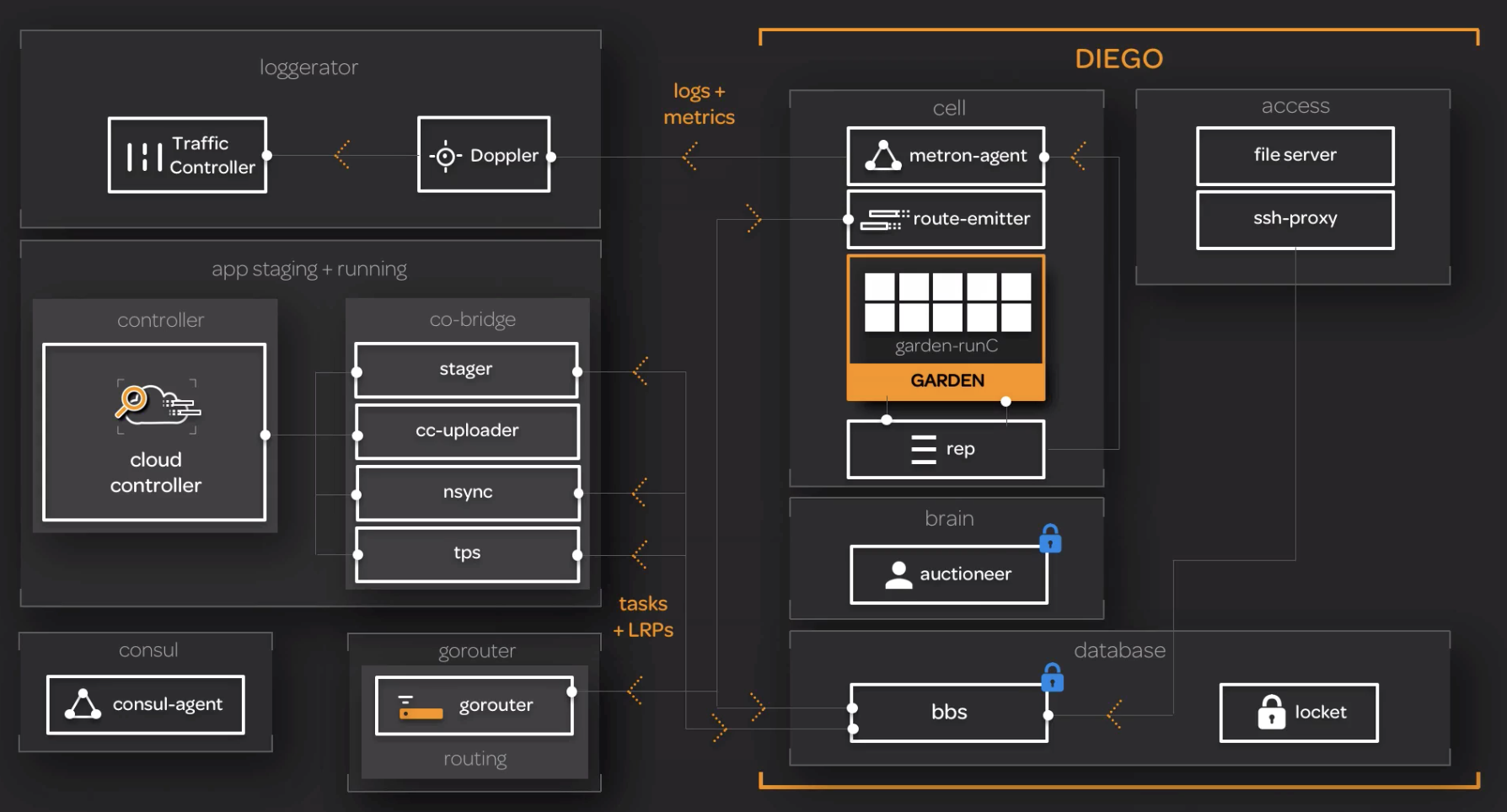

Diego

Diego schedules tasks and long-running processes (LRPs).

- Task - A process that is guaranteed to run at most once, e.g. staging an application.

- LRP - A long-running process, typically a web app. LRPs can have multiple instances.

- Container - An application instance runs within an immutable container.

- Cell - Containers run within a cell.

- Garden - Containers are managed by Garden.

- Rep - Represents the cell in the BBS and in auctions.

- Auction - Auctions are held in which cells bid to execute a task or an LRP.

- Executor - Manages container allocations on the cell. It also streams `stdout` and `stderr` to Metron.

- Metron - Forwards logs to the Loggregator subsystem.

- BBS - The Bulletin Board System is the API for accessing the Diego database of tasks and LRPs.

- Brain - The brain is composed of two components: the Auctioneer, which holds auctions for tasks and LRPs, and the Converger, which reconciles desired LRPs against actual LRPs through auctions.
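The auction idea can be illustrated with a toy model in which each cell's Rep returns a bid and the lowest bid wins. This is a simplified Python sketch, not Diego's real scoring. The memory-only score is an assumption; Diego also weighs disk, container count, and AZ spread. `Cell` and `hold_auction` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Cell:
    name: str
    total_mb: int
    used_mb: int

    def bid(self, required_mb: int) -> Optional[float]:
        """Return this cell's bid (lower is better), or None if the work does not fit.

        Hypothetical scoring: the fraction of memory in use after placing the work,
        so emptier cells win.
        """
        if self.used_mb + required_mb > self.total_mb:
            return None
        return (self.used_mb + required_mb) / self.total_mb


def hold_auction(cells: list[Cell], required_mb: int) -> Optional[Cell]:
    """Collect a bid from every cell (via its Rep) and place the work on the best bidder."""
    bids = [(score, cell) for cell in cells if (score := cell.bid(required_mb)) is not None]
    if not bids:
        return None  # no cell can run it; the work would wait for a later auction
    _, winner = min(bids, key=lambda pair: pair[0])
    winner.used_mb += required_mb
    return winner
```

Example: with `cell-a` at 3072/4096 MB and `cell-b` at 1024/4096 MB, a 512 MB LRP instance goes to `cell-b`.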

Loggregator

- Metron - Forwards logs to the Loggregator subsystem.

- Doppler - Gathers logs from Metron. It also handles app syslog drains to services like Splunk or Papertrail.

- Traffic Controller - Handles client requests for logs. It also exposes a WebSocket endpoint called the Firehose.

- Firehose - A WebSocket endpoint that exposes app logs, container metrics, and Elastic Runtime component metrics.

- Nozzles - Consume the Firehose output.
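The log path above (Metron to Doppler to the Firehose to nozzles) can be modelled in memory to show how the data flows. This Python sketch is only an illustration. The envelope shape, the buffering in `Doppler`, and every class and function name are assumptions. In the real system, the Traffic Controller serves the Firehose over WebSocket and envelopes are protobuf messages.

```python
from collections import deque
from typing import Callable

# Hypothetical envelope shape; real Loggregator envelopes carry much more metadata.
Envelope = dict[str, str]
Nozzle = Callable[[Envelope], None]


class Firehose:
    """Toy stand-in for the Firehose endpoint: every nozzle gets every envelope."""

    def __init__(self) -> None:
        self.nozzles: list[Nozzle] = []

    def subscribe(self, nozzle: Nozzle) -> None:
        self.nozzles.append(nozzle)

    def publish(self, envelope: Envelope) -> None:
        for nozzle in self.nozzles:
            nozzle(envelope)


class Doppler:
    """Toy Doppler: buffers envelopes received from Metron, then flushes them downstream."""

    def __init__(self, firehose: Firehose) -> None:
        self.firehose = firehose
        self.buffer: deque[Envelope] = deque()

    def receive(self, envelope: Envelope) -> None:
        self.buffer.append(envelope)

    def flush(self) -> None:
        while self.buffer:
            self.firehose.publish(self.buffer.popleft())


def metron_forward(doppler: Doppler, app_id: str, line: str) -> None:
    """Toy Metron: wraps one stdout/stderr line in an envelope and forwards it to Doppler."""
    doppler.receive({"app_id": app_id, "message": line})
```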

Cloud Controller API

The Cloud Controller exposes an API for using and managing the Elastic Runtime (CF).

- Cloud Controller Database - The Cloud Controller persists org, space, and app data in the Cloud Controller database.

- Blob Store - The Cloud Controller persists app packages and droplets to the blob store.

- CC-Bridge - The CC-Bridge translates app-specific messages into the generic language of tasks and LRPs.

Routing

The router routes incoming traffic to the appropriate components and to all app instances.

The router load-balances requests across an application's instances in round-robin fashion.
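Round-robin balancing sends each new request to the next instance in turn. The Python sketch below shows only that technique and is not the router's implementation. `RoundRobinRouter` and the instance addresses are hypothetical. The instance list is also fixed here, whereas the real router tracks instances as they come and go.

```python
from itertools import cycle


class RoundRobinRouter:
    """Toy router that hands each request to the next app instance in turn."""

    def __init__(self, instances: list[str]) -> None:
        # cycle() repeats the instance list endlessly: a, b, a, b, ...
        self._next_instance = cycle(instances)

    def route(self, request_path: str) -> str:
        instance = next(self._next_instance)
        return f"{request_path} -> {instance}"
```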

Buildpacks

Buildpacks provide framework and runtime support for your applications. They stage your application by building an immutable droplet.

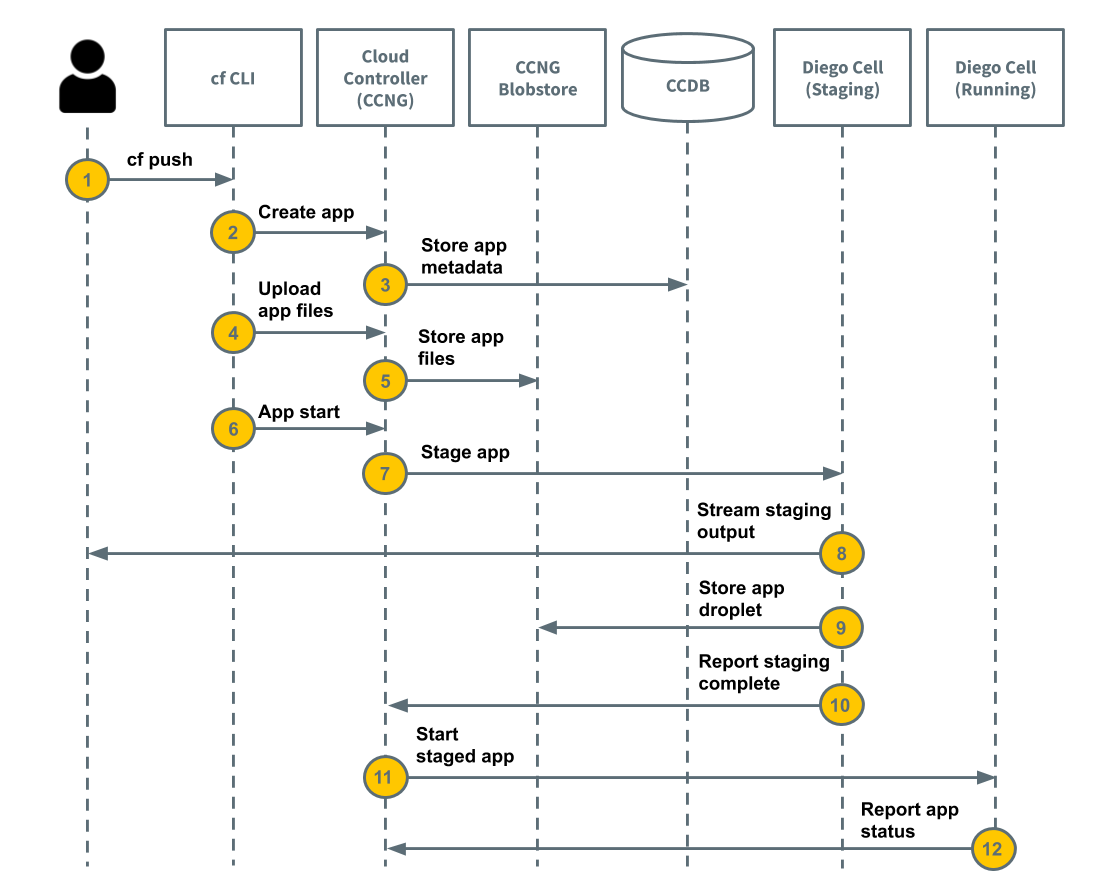
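If no buildpack is specified at push time, Cloud Foundry runs each installed buildpack's detect step in position order. It then stages the app with the first buildpack that matches. The Python sketch below models that selection. The detect rules are hypothetical and much simpler than the real buildpacks' detection logic, and `BUILDPACKS` and `detect_buildpack` are illustrative names.

```python
from typing import Callable, Optional

Detector = Callable[[set[str]], bool]

# Hypothetical, heavily simplified detect rules, listed in buildpack position order.
BUILDPACKS: list[tuple[str, Detector]] = [
    ("java_buildpack", lambda files: "pom.xml" in files or any(f.endswith(".jar") for f in files)),
    ("nodejs_buildpack", lambda files: "package.json" in files),
    ("python_buildpack", lambda files: "requirements.txt" in files),
]


def detect_buildpack(app_files: set[str]) -> Optional[str]:
    """Return the first buildpack (by position) whose detect rule accepts the app."""
    for name, detect in BUILDPACKS:
        if detect(app_files):
            return name
    return None  # staging would fail: no buildpack detected the app
```

Position matters. An app containing both a `.jar` and a `package.json` is claimed by whichever buildpack comes first.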

How Diego stages buildpack applications

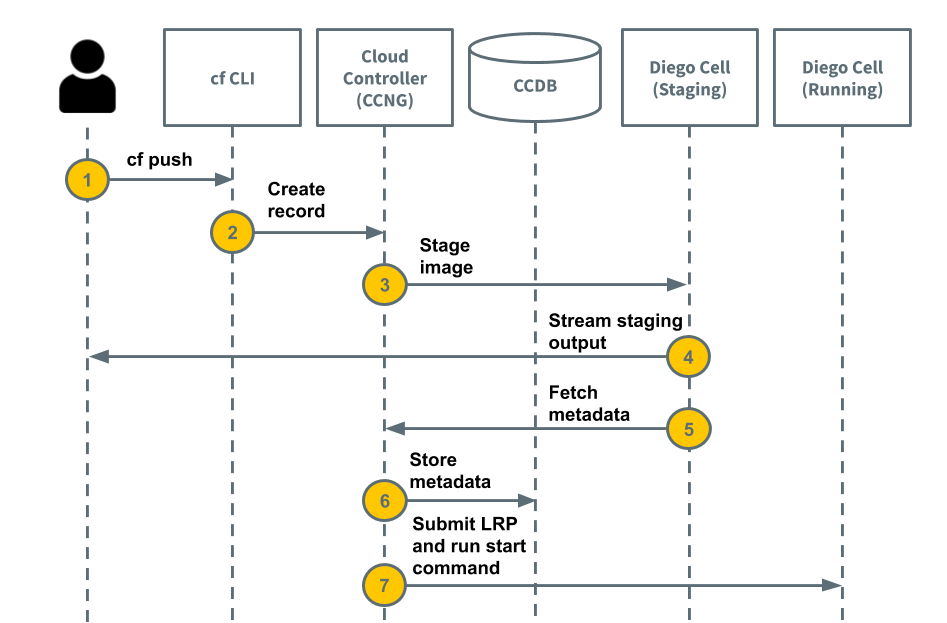

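How Diego stages Docker images

![How Diego stages Docker images](https://docs.cloudfoundry.org/concepts/images/docker_push_flow_diagram_diego.png)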

High Availability

Availability Zones

Application instances are distributed evenly across availability zones (AZs), so an application with more than one instance stays up even if an entire AZ is lost.
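This Python sketch shows why spreading instances across AZs helps. Round-robin assignment is used here as a simple stand-in for Diego's auction-based spreading, which is an assumption, and the AZ names are hypothetical.

```python
from collections import Counter


def place_instances(instance_count: int, azs: list[str]) -> list[str]:
    """Assign instance i to AZ i mod len(azs), spreading instances as evenly as possible."""
    return [azs[i % len(azs)] for i in range(instance_count)]


def instances_per_az(placement: list[str]) -> Counter:
    """Count how many instances landed in each AZ."""
    return Counter(placement)


def surviving_instances(placement: list[str], lost_az: str) -> int:
    """Count instances that keep running after `lost_az` goes down."""
    return sum(1 for az in placement if az != lost_az)
```

With four instances over `z1`, `z2`, and `z3`, losing `z1` still leaves two instances running. A single-instance app, by contrast, goes down until its instance is rescheduled elsewhere.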

BOSH-Managed Processes

Elastic Runtime processes are monitored by Monit and restarted automatically when they fail. The event is also reported to the BOSH Health Monitor for further investigation.

Resurrector

The BOSH agent continuously reports the health of its VM/job. If the VM fails or is destroyed or deleted, the Health Monitor's Resurrector reports it to the BOSH Director, which automatically recreates the VM.
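The Resurrector flow amounts to this: a VM that stops reporting is considered failed, and the Director is asked to recreate it. The Python sketch models that idea with heartbeat timestamps. The 60-second timeout, the `HealthMonitor` class, and the `recreate` callback are all hypothetical. None of them are BOSH's actual configuration or API.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical threshold: how long a VM may stay silent before it is treated as failed.
HEARTBEAT_TIMEOUT_S = 60.0


@dataclass
class HealthMonitor:
    """Toy health monitor that tracks the last heartbeat time of each VM/job."""

    last_heartbeat: dict[str, float] = field(default_factory=dict)

    def heartbeat(self, vm: str, now: float) -> None:
        """Record a heartbeat sent by the BOSH agent on `vm`."""
        self.last_heartbeat[vm] = now

    def unresponsive(self, now: float) -> list[str]:
        """Return the VMs whose last heartbeat is older than the timeout."""
        return sorted(
            vm for vm, seen in self.last_heartbeat.items()
            if now - seen > HEARTBEAT_TIMEOUT_S
        )


def resurrect(monitor: HealthMonitor, now: float, recreate: Callable[[str], None]) -> None:
    """Ask the director (here, the `recreate` callback) to recreate each unresponsive VM."""
    for vm in monitor.unresponsive(now):
        recreate(vm)
        # Treat the recreate request as fresh contact so the VM is not reported again immediately.
        monitor.heartbeat(vm, now)
```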

Self-Healing Application Instances

Once running, failed application instances are recreated automatically. The brain continuously compares the desired state of application instances with their actual state; when the two do not match, it begins recreating the missing instances.
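At its core, the desired-versus-actual comparison is a diff of instance counts. The minimal Python sketch below computes that diff. `reconcile` is a hypothetical name, and in Diego the resulting starts would go through auctions rather than being returned as a dict.

```python
def reconcile(desired: dict[str, int], actual: dict[str, int]) -> dict[str, int]:
    """Return how many instances to start (positive) or stop (negative) per app.

    Apps whose desired and actual counts already match are left out of the plan.
    """
    plan: dict[str, int] = {}
    for app in sorted(desired.keys() | actual.keys()):
        delta = desired.get(app, 0) - actual.get(app, 0)
        if delta:
            plan[app] = delta
    return plan
```

For example, if `spring-music` should run 3 instances but only 2 are alive, the plan is to start one more.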

Startup Order

As of PAS 2.2, this is the startup order I've found for the CF instance groups, based on the deployment manifest (`bosh manifest`):

consul_server > nats > nfs_server > mysql_proxy > mysql > diego_database > uaa > cloud_controller > ha_proxy > router > mysql_monitor > clock_global > cloud_controller_worker > diego_brain > diego_cell > loggregator_trafficcontroller > syslog_adapter > syslog_scheduler > doppler > credhub

- name: database, instances: 0, serial: true

- name: blobstore, instances: 0, serial: true

- name: control, instances: 0, serial: true

- name: compute, instances: 0

- name: consul_server, instances: 1, serial: true

- name: nats, instances: 1

- name: nfs_server, instances: 1, serial: true

- name: mysql_proxy, instances: 1

- name: mysql, instances: 1

- name: backup-prepare, instances: 0

- name: diego_database, instances: 1, serial: true

- name: uaa, instances: 1, serial: true

- name: cloud_controller, instances: 1, serial: true

- name: ha_proxy, instances: 1

- name: router, instances: 1, serial: true

- name: mysql_monitor, instances: 1

- name: clock_global, instances: 1

- name: cloud_controller_worker, instances: 1

- name: diego_brain, instances: 1

- name: diego_cell, instances: 1

- name: loggregator_trafficcontroller, instances: 1

- name: syslog_adapter, instances: 1

- name: syslog_scheduler, instances: 1

- name: doppler, instances: 1

- name: tcp_router, instances: 0

- name: credhub, instances: 1

- name: push-apps-manager, instances: 0
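The startup order above appears to be the manifest's instance groups in listing order, skipping groups with 0 instances. This Python sketch checks that reading against the list. The `(name, instances)` pairs are copied from above with the `serial` flags omitted. `INSTANCE_GROUPS` and `startup_order` are hypothetical names.

```python
# (name, instances) pairs copied from the manifest list above; serial flags omitted.
INSTANCE_GROUPS: list[tuple[str, int]] = [
    ("database", 0), ("blobstore", 0), ("control", 0), ("compute", 0),
    ("consul_server", 1), ("nats", 1), ("nfs_server", 1), ("mysql_proxy", 1),
    ("mysql", 1), ("backup-prepare", 0), ("diego_database", 1), ("uaa", 1),
    ("cloud_controller", 1), ("ha_proxy", 1), ("router", 1), ("mysql_monitor", 1),
    ("clock_global", 1), ("cloud_controller_worker", 1), ("diego_brain", 1),
    ("diego_cell", 1), ("loggregator_trafficcontroller", 1), ("syslog_adapter", 1),
    ("syslog_scheduler", 1), ("doppler", 1), ("tcp_router", 0), ("credhub", 1),
    ("push-apps-manager", 0),
]


def startup_order(groups: list[tuple[str, int]]) -> list[str]:
    """Keep manifest order, dropping instance groups that have no instances."""
    return [name for name, instances in groups if instances > 0]


if __name__ == "__main__":
    print(" > ".join(startup_order(INSTANCE_GROUPS)))
```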

Quick Reference

Spring-App log level

This doesn't really belong here, but I didn't want to make a new page for one small thing.

To increase the logging level of a Spring app in a CF environment, you can use `cf set-env` like so:

`cf set-env APP_NAME ENV_VAR_NAME ENV_VAR_VALUE`

For example:

`cf set-env spring-music logging.level.root TRACE`

Restart the app, then stream its logs:

`cf logs spring-music`
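The steps above can be scripted. This Python sketch builds the same `cf` commands as argument lists and runs them only when `dry_run` is False. It assumes the `cf` CLI is installed, logged in, and targeted at the right org and space. `log_level_commands` and `run` are hypothetical helper names.

```python
import subprocess


def log_level_commands(app: str, level: str, logger: str = "root") -> list[list[str]]:
    """Build the cf CLI commands that raise a Spring app's log level and then stream its logs."""
    return [
        ["cf", "set-env", app, f"logging.level.{logger}", level],
        ["cf", "restart", app],
        ["cf", "logs", app],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops at the first failing command; `cf logs` streams until interrupted.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(log_level_commands("spring-music", "TRACE"))
```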